11 things you probably didn’t know about Apple’s Photos app

The new Photos white paper is well worth a look…

Apple’s newly published privacy white papers offer plenty of insight into how its privacy tools work, and the report detailing how Photos works is packed with things most of us probably didn’t know. Here are 11 of them:

1. Learn how your machine learning is learning

I think most Photos users are aware that the app uses machine learning to figure out things like content, image quality and more, but the report reveals a whole host of different considerations the ML makes.

Not only does it identify objects, but it also analyses things like light, sharpness, color, tuning, framing and more using its on-device neural network.

And while it does so, it also verifies image quality using similar attributes to work out what the subject of an image is and which images in your collection are actually the best.

Justin Titi displays intelligently curated photo albums in iOS 13.

2. The AI is fast

Apple says that scene classification in Photos can identify more than a thousand different categories, and stresses how quickly it manages to do so.

An iPhone XS can classify the scene in a single image in under 7 milliseconds, with its chip’s Neural Engine processing over 11 billion operations in that time.
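
To put those two numbers side by side, here’s a quick back-of-the-envelope calculation in Python. This is my own arithmetic, not a figure from Apple, and because both numbers are given as bounds (“under” and “over”), the result is a rough floor rather than a benchmark.

```python
# Figures as quoted above from Apple's white paper.
operations = 11e9   # "over 11 billion operations"
seconds = 7e-3      # "under 7 milliseconds"

# Both inputs are bounds, so these are rough estimates only.
ops_per_second = operations / seconds
images_per_second = 1 / seconds

print(f"{ops_per_second / 1e12:.2f} trillion operations per second")
print(f"{images_per_second:.0f} images per second")
```

In other words, roughly 1.57 trillion operations per second, or about 143 images classified every second if the device did nothing else.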

3. Apple uses supervised learning

Apple has confirmed that the AI behind its image intelligence uses supervised learning.

That’s typical when developing image intelligence, as it lends itself to this kind of analysis of large data sets. Unsupervised and reinforcement learning are alternative ML approaches, but those aren’t used here.

In Photos, the “scene classification algorithm is trained by providing labeled pictures, such as images of trees, to allow the model to learn the pattern of what a ‘tree’ looks like,” Apple says.
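
If you want a feel for what “trained by providing labeled pictures” means, here’s a toy sketch in Python. To be clear, this is not Apple’s method: Photos uses an on-device neural network, while this example swaps in a much simpler nearest-centroid classifier. The two features and all the numbers are invented for illustration.

```python
from collections import defaultdict
from math import dist

# Hypothetical training data: (feature vector, label). The two features
# are invented stand-ins (say, "greenness" and "blueness"); a real model
# learns its own features from raw pixels.
labeled_examples = [
    ((0.9, 0.2), "tree"),
    ((0.8, 0.3), "tree"),
    ((0.2, 0.9), "sky"),
    ((0.1, 0.8), "sky"),
]

def train(examples):
    """Learn one average feature vector (centroid) per label."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(axis) / len(axis) for axis in zip(*vectors))
        for label, vectors in grouped.items()
    }

def classify(model, features):
    """Pick the label whose centroid is closest to the new example."""
    return min(model, key=lambda label: dist(model[label], features))

model = train(labeled_examples)
print(classify(model, (0.85, 0.25)))  # an unlabeled, tree-like example
```

The principle is the same one Apple describes: show the model labeled examples, let it learn a pattern for each label, then ask it to label something it hasn’t seen.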

Apple’s made Memories much smarter

4. The magic happens at night

Apple knows you need to use your smartphone all day, which is why the Photos app on iOS and iPadOS curates your new images overnight when your device is connected to power.

This is so the process doesn’t drain your battery while you’re on the move.

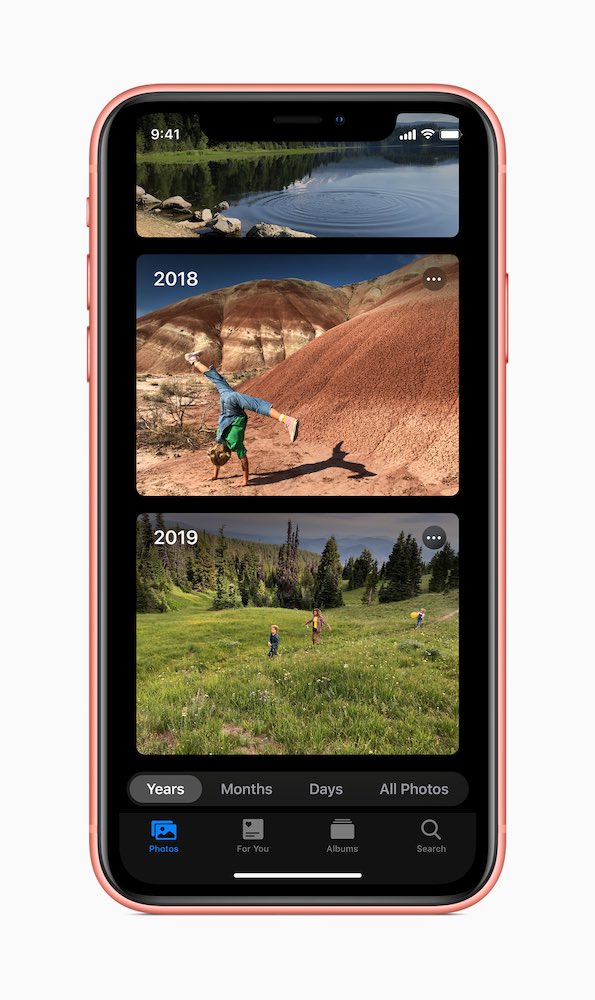

5. The views are smarter than you thought

Apple offers a range of different views.

These are smart.

Days intelligently hides screenshots, screen recordings, blurry photos, and photos with bad framing and lighting.

Photos also uses on-device, pretrained models that identify shots with specific objects, like photos of documents and office supplies, and marks them as clutter. Oh, and if you’ve ever wondered, Photos is indeed attempting to choose the best images from your collections using this AI.

Tim Cook stands in front of an image of the new iPhone 11 Pro camera

6. Some things you won’t see

Days automatically hides screenshots, screen recordings, blurry photos, photos with bad framing and lighting, whiteboards, receipts, documents, tickets and credit cards.
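
Conceptually, this is a filter over tagged photos. Here’s a minimal Python sketch of the idea. It assumes each photo already carries a set of tags from the on-device models; the tag names, file names and data layout are all hypothetical, since Apple doesn’t publish its internal labels.

```python
# Categories the article lists as hidden from Days. The tag names are
# hypothetical; Apple does not publish its internal labels.
HIDDEN_FROM_DAYS = {
    "screenshot", "screen_recording", "blurry", "bad_framing_or_lighting",
    "whiteboard", "receipt", "document", "ticket", "credit_card",
}

def visible_in_days(photos):
    """Keep photos that carry none of the hidden tags."""
    return [p for p in photos if not (p["tags"] & HIDDEN_FROM_DAYS)]

# A made-up library for illustration.
library = [
    {"name": "beach.jpg", "tags": {"outdoor", "people"}},
    {"name": "lunch_receipt.jpg", "tags": {"receipt", "document"}},
    {"name": "IMG_0042.png", "tags": {"screenshot"}},
    {"name": "gig.jpg", "tags": {"concert", "people"}},
]

shown = [p["name"] for p in visible_in_days(library)]
print(shown)
```

Only the beach and gig shots survive; the receipt and the screenshot are treated as clutter.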

7. Some things you’ll see more often

Do you often take images of the same person?

Photos spots this and surfaces those images more often in your collection. It also figures out where you took images – if you go to a gig, for example, you’ll see more images taken there in the Days view, on the assumption that these will be interesting to you.

(Images you Favorite also get higher priority.)

See, there’s your keys!

8. The Photos Knowledge Graph

Apple explains: “Photos analyzes a user’s photo library, deeply connecting and correlating data from their device, to deliver personalized features throughout the app. This analysis yields a private, on-device knowledge graph that identifies interesting patterns in a user’s life, like important groups of people, frequent places, past trips, events, the last time a user took a picture of a certain person, and more.”
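
Apple doesn’t say how the graph is built, so the following is only a toy Python tally of the kinds of patterns the quote mentions: frequent places, people who appear together, and the last time someone was photographed. The metadata fields, names, places and dates are all made up, and a real knowledge graph would be far richer than three counters.

```python
from collections import Counter
from datetime import date
from itertools import combinations

# Hypothetical photo metadata; the names, places and dates are made up.
photos = [
    {"taken": date(2019, 6, 1), "place": "Lisbon", "people": ["Ana", "Ben"]},
    {"taken": date(2019, 6, 2), "place": "Lisbon", "people": ["Ana", "Ben"]},
    {"taken": date(2019, 8, 9), "place": "Home", "people": ["Ana"]},
    {"taken": date(2019, 9, 3), "place": "Home", "people": ["Cleo"]},
]

place_counts = Counter()  # how often each place appears
pair_counts = Counter()   # how often two people appear together
last_seen = {}            # most recent photo date per person

for photo in photos:
    place_counts[photo["place"]] += 1
    for pair in combinations(sorted(photo["people"]), 2):
        pair_counts[pair] += 1
    for person in photo["people"]:
        previous = last_seen.get(person, photo["taken"])
        last_seen[person] = max(previous, photo["taken"])

print(pair_counts.most_common(1))  # the most frequent pairing
print(last_seen["Ana"])            # the last time Ana was photographed
```

The point is that everything here is derived from data already on the device, which is why the analysis can stay there.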

9. What is semantic segmentation?

Photos uses an advanced segmentation technology to locate and separate the subject from the background of an image.

In iOS 13, the tech can identify specific facial regions semantically, allowing the device to isolate hair, skin, and teeth in a photo.

This also enables the device to understand which regions of the face to light, like skin, while determining which areas, like beards, need to be preserved when simulating studio-quality lighting effects across different parts of the face. It is one of a range of technologies used in Portrait mode.
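
To see why a per-region mask matters, here’s a deliberately tiny Python sketch: a six-pixel “image” and a matching mask of labels, with brightening applied only where the mask says “skin”. The labels, brightness values and gain are hypothetical, and Apple’s actual lighting simulation is far more sophisticated than multiplying pixel values.

```python
# A tiny 2x3 "image" of brightness values (0-255) and a matching label
# mask. The labels and the gain are hypothetical, for illustration only.
image = [
    [100, 120, 40],
    [110, 130, 45],
]
mask = [
    ["skin", "skin", "beard"],
    ["skin", "skin", "beard"],
]

def relight(image, mask, region="skin", gain=1.2):
    """Brighten pixels in one labeled region; leave the rest untouched."""
    return [
        [
            min(255, round(value * gain)) if label == region else value
            for value, label in zip(image_row, mask_row)
        ]
        for image_row, mask_row in zip(image, mask)
    ]

lit = relight(image, mask)
print(lit)
```

The skin pixels get brighter while the beard pixels keep their original values, which is the “light this, preserve that” behavior described above.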

10. What about Portrait Lighting?

To develop Portrait Lighting, Apple designed studies with studio photographers.

In one study, Apple developed a 360-degree environment map of studio lighting scenarios to radiometrically measure the real position and the amount of light emanating to the subject from light sources in all directions.

11. Private lives

All of this analysis, including scene classification and the knowledge graph in Photos, is performed on photos and videos on the user’s device, and the results of this analysis data are not available to third parties or Apple.

Additionally, this on-device work is optimized for Apple devices. For example, when a user edits a photo or video, the entire editing process is run on device and optimized for the A-series chips and GPUs in Apple products.

Apple doesn’t access user photos and doesn’t use them for advertising or for research and development. On-device analysis, like the user’s knowledge graph, isn’t synced or shared with Apple.

Want more of this? Read the white paper, which will be made available here.

Please follow me on Twitter, or join me in the AppleHolic’s bar & grill and Apple Discussions groups on MeWe.
