3 ways Apple technologies will transform future healthcare

There’s some speculation that Apple is working to introduce as-yet-unknown, regulator-approved digital health sensors in a future iteration of Apple Watch. While we don’t know what these will do, it’s reasonable to think they may offer non-invasive ways to recognize diabetic symptoms, as similar systems are beginning to reach the market today. That’s great, but the full potential of connected digital health reaches so much further.

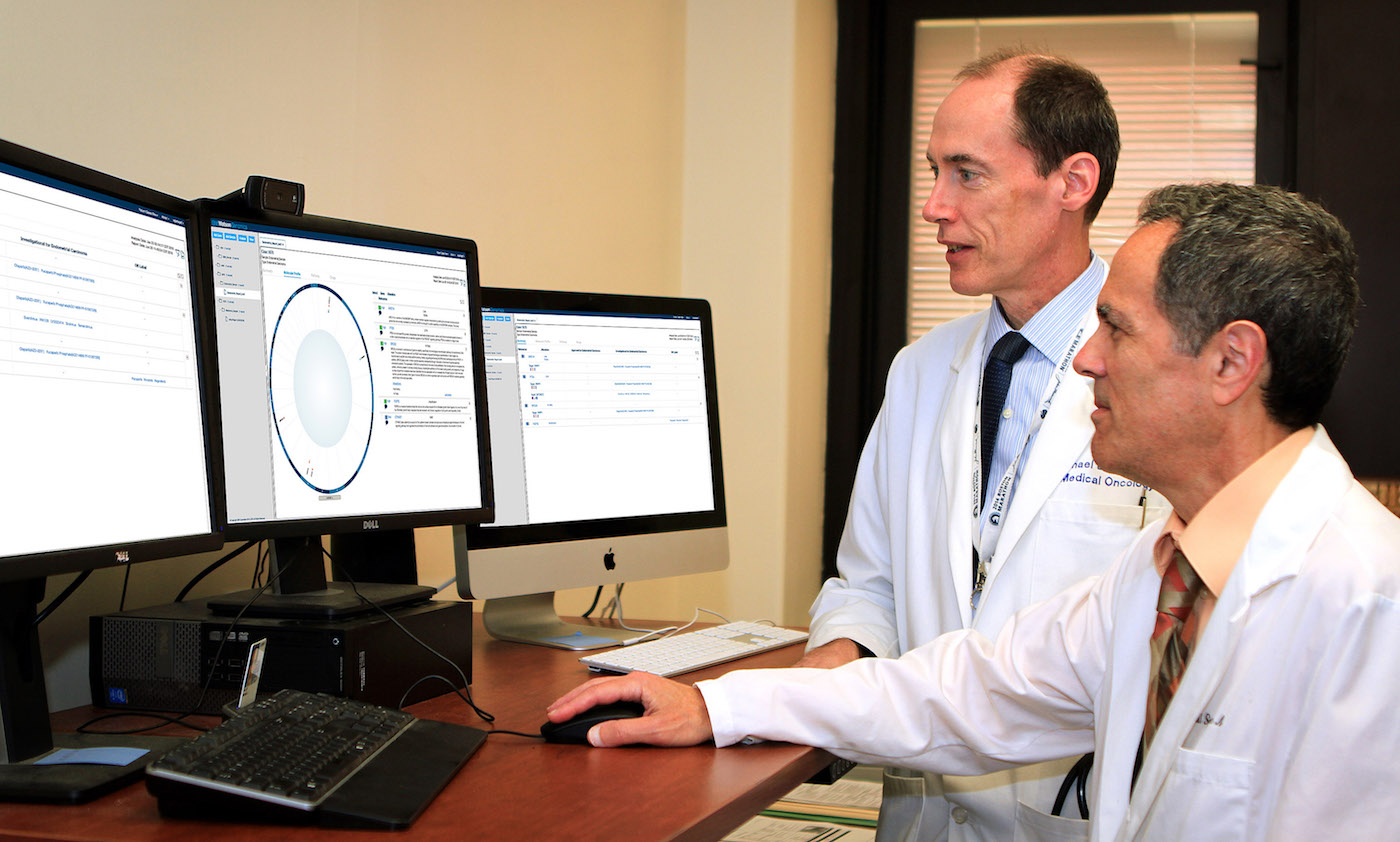

Telemedicine

PatentlyApple has spotted a telemedicine patent filed by Apple. What this does is relatively simple – it gathers all your Health data in one Electronic Patient Health Record that you can share with medical advisors. Similar systems are already in place, such as the Ochsner Hypertension Digital Medicine Program. That’s useful, as it means we can expect to have meaningful telemedicine sessions with doctors in future without needing to visit them in person.

That’s great, but the full potential of connected telemedicine reaches much further. IBM has been developing its Watson AI system, and that system can now diagnose medical problems effectively. This story explains how Watson was able to use a leukemia patient’s medical records to correctly diagnose their condition and recommend treatment doctors had been unable to identify.

Now imagine if Apple’s patent for patient data were tied to IBM Health AI. You might even be able to consider a 24/7 health plan in which your vital signs were constantly monitored for any sign of problems. If a health emergency were noted, not only would you be able to get help early (or be driven to hospital by your car), but the consequential costs of treatment may also be reduced. Imagine how this could reduce healthcare costs, mitigate insurance costs and save lives. And think how these benefits could be extended to impoverished or remote communities worldwide. All they need is the sensors. Sensors will soon even be able to detect cancer.

Disease prevention and control

Twitter can track the spread of disease.

“Adam Sadilek at the University of Rochester in New York and his colleagues used Twitter to follow the spread of flu virus in New York City… Combining this with location data, the team was able to see how the flu was travelling and predict when Twitter users would fall ill. It could, perhaps, one day be used to warn people when they’re about to enter an area with a high infection rate.” (New Scientist)

The problem with Twitter is lack of privacy. However, think of how much your mobile devices can track about you. Users on some platforms will be sharing all this information all the time with everybody, but Apple’s use of differential privacy means that your own personal identity will be kept secret even while important information can be shared. So, if your device knows you are sick, it can share that you are not well in a completely anonymized way. This data can be combined with other data stacks, and analytics systems can already figure out if lots of people happen to be ill in an area at the same time. This will enable public health authorities to quickly identify signs of disease or illness outbreaks, and may even enable them to trace an outbreak right back to its source. This interesting report will show you how mobile phones can help combat malaria.

The profound thing about differential privacy is that such advantages can be realized in the here and now without your personal data even being connected to the situation.
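To make the idea concrete, here is a minimal sketch of randomized response, a classic technique for achieving differential privacy on the device itself. It is an illustration only, not Apple’s actual mechanism, and the function names (`randomize`, `estimate_rate`, `simulate`) and the `epsilon` values are my own assumptions. Each device sometimes lies about whether its owner is sick, so no single report can be trusted, yet the overall illness rate can still be estimated from many reports.

```python
import math
import random


def randomize(is_sick: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true status with probability e^eps / (1 + e^eps), else flip it."""
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return is_sick if rng.random() < p_truth else not is_sick


def estimate_rate(reports: list[bool], epsilon: float) -> float:
    """Debias the noisy reports to estimate the true fraction of sick users.

    Requires epsilon > 0; at epsilon == 0 the reports carry no signal.
    """
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    # observed = p * rate + (1 - p) * (1 - rate), solved for rate:
    return (observed - (1.0 - p_truth)) / (2.0 * p_truth - 1.0)


def simulate(n_users: int, true_rate: float, epsilon: float, seed: int = 0) -> float:
    """Hypothetical population: each user is sick with probability true_rate."""
    rng = random.Random(seed)
    truths = [rng.random() < true_rate for _ in range(n_users)]
    reports = [randomize(t, epsilon, rng) for t in truths]
    return estimate_rate(reports, epsilon)


if __name__ == "__main__":
    # With many users the estimate should land close to the true 10% rate.
    print(round(simulate(100_000, 0.10, epsilon=1.0), 3))
```

A smaller `epsilon` means each device lies more often, which strengthens individual privacy but requires more reports before the estimate becomes useful.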

VR for health

I’ve already discussed how VR could enable medical intervention, in terms of surgeons requesting help or advice during a procedure. One great example of this is a new technology from Leica Microsystems that uses “image injection” to display virtual overlays within a surgical microscope to help guide a neurosurgeon’s hands. It was recently used by Dr. Joshua Bederson of Mount Sinai Health System in New York to treat a patient with an aneurysm.

I imagine there will also be potential to enable procedures to take place remotely, using automated on-site systems controlled by remote surgical teams. This kind of “remote telesurgery” should be of some use in public emergencies, disasters and conflict zones, and in extending help to remote communities. There are already people working on such solutions. Then you have the VR opportunity for training, such as this Maps-based project created by a UK developer.

Not all of these solutions are necessarily iOS based, but Apple has a huge part to play in these developments, and we already know how deeply invested it is in this sector. As Apple CEO Tim Cook put it: “When you look at most of the solutions, whether it’s devices, or things coming up out of Big Pharma, first and foremost, they are done to get the reimbursement [from an insurance provider]. Not thinking about what helps the patient. So if you don’t care about reimbursement, which we have the privilege of doing, that may even make the smartphone market look small.”