A first look at the Apple Vision Pro user interface and the tech inside

I was curious about how we will use Apple’s new Vision Pro headset, so I’ve gone through the information available so far and pulled together the following insights into its user interface.

It also seemed useful to share what we know so far about the device’s technical specifications, which I’ve gathered into a list below.

What is the user interface?

Apple has built an interface for visionOS that responds to gestures, eye movements and voice. At present we don’t know much about that UI, and it will be several months before we learn it all, but here is what we know so far:

You navigate visionOS by looking at apps, buttons and text fields. When you look at an item, it reacts (an app icon bounces, for example) so you can see it is active, and you can then act on it.

The actions you can apply that we know of so far include:

- Tap your fingers together to select.

- Gently flick to scroll.

Voice takes this further. For example, you can look at the microphone button in a search field to activate it and then dictate text, and you can ask Siri to open or close apps or play media.

Accessibility tools such as Dwell Control, Voice Control and Pointer Control are also supported. The headset also works with the Magic Trackpad and Magic Keyboard.

When building apps for these devices, developers can choose how an app icon or control makes itself known to a user when it is selected. Apple says these elements can either glow subtly or be highlighted in the space.

Apple explained that porting existing apps to support these systems can be as simple as ticking a checkbox in Xcode.

What about the tech specifications for the device?

Here, in rough order, is what we know about the tech specifications of these devices.

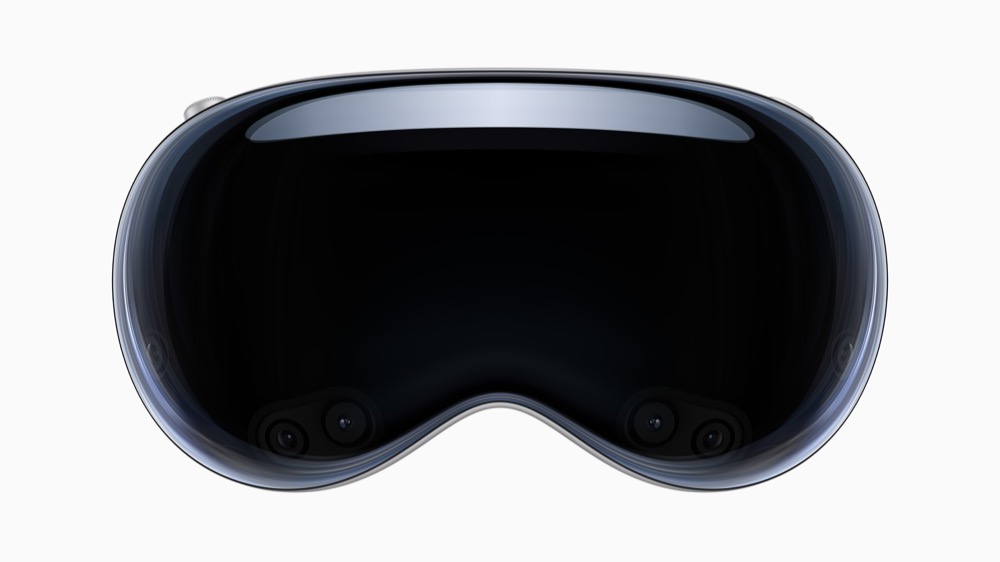

Design

- 3D-formed, curved, laminated glass.

- A Digital Crown.

- A body made of a custom aluminium alloy.

- The headband is interchangeable so it fits your head.

- Apple has partnered with Zeiss on optical inserts that attach magnetically to the lenses.

Processors

- An M2 Apple silicon chip powers the system.

- The R1 chip processes input from the sensors, which is intended to reduce motion sickness. It streams new images to the displays within 12 milliseconds, which Apple says is 8x faster than the blink of an eye.


Display and cameras

- The displays are micro-OLED.

- There are 23 million pixels across the two displays. That’s almost triple the number of pixels in a 4K TV.

- Apple says screens can feel 100 feet wide.

- Speakers positioned near your ears support a technology called audio ray tracing to deliver spatial audio.

- 12 cameras, including two that look at the outside world and two pointing down to track hand movement.

- Two IR cameras inside the device.

- Infrared flood illuminators help the device track gestures in the dark.

- LEDs used for eye tracking.

- Five sensors.

- Six microphones.

- A LiDAR scanner and TrueDepth camera work together to create a fused 3D map of your surroundings, enabling Vision Pro to render digital content accurately in your space.

- Optic ID is a new authentication system that Apple calls more secure than Face ID.

- A thermal system moves air through the device to keep it cool and quiet.

- An external battery pack provides up to two hours of use.

- You can also use the device while it is plugged in to power.
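The “almost triple” pixel comparison in the list above is easy to sanity-check. This short Python sketch assumes that a “4K TV” means a consumer UHD panel at 3840 × 2160 and takes Apple’s rounded figure of 23 million pixels at face value; both of those are my assumptions, not figures Apple gave for this comparison.

```python
# Rough check of the Vision Pro vs 4K TV pixel comparison.
# Assumption: "4K TV" = consumer UHD resolution of 3840 x 2160.
UHD_WIDTH, UHD_HEIGHT = 3840, 2160

# Apple's rounded figure, covering both displays combined.
VISION_PRO_PIXELS = 23_000_000

uhd_pixels = UHD_WIDTH * UHD_HEIGHT        # total pixels on one 4K panel
ratio = VISION_PRO_PIXELS / uhd_pixels     # how many 4K panels' worth of pixels
per_eye = VISION_PRO_PIXELS / 2            # assumes an even split between the two displays

print(f"4K TV pixels: {uhd_pixels:,}")
print(f"Vision Pro vs 4K TV: {ratio:.2f}x")
print(f"Per eye vs 4K TV: {per_eye / uhd_pixels:.2f}x")
```

At roughly 2.8x, “almost triple” holds up, and even split across two displays, each eye still gets more pixels than a single 4K panel.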

The price

- It will cost $3,499.

- It will be available in the US next year, and in other countries later.

Please follow me on Mastodon, or join me in the AppleHolic’s bar & grill and Apple Discussions groups on MeWe.