Apple AI team seeks to make Siri voices better and faster

Apple has published fresh AI research that may hint at how it achieved some of the Siri voice improvements it will introduce with its next-generation operating systems.

Finding a way to build voices faster

The study, “Combining Speakers of Multiple Languages to Improve Quality of Neural Voices,” details how Apple researchers sought to improve voice quality while working with limited sample data.

They considered multiple architectures and training procedures for developing a multi-speaker, multilingual neural text-to-speech (TTS) system. The goal was to improve quality when the available data in the target language is limited, and also to enable cross-lingual synthesis.

To achieve this, they worked with 30 speakers in 8 different languages across 15 different locales. They broke language expression down into very small speech segments, typically vowels, consonants and punctuation. This information was then fed into a machine learning system, which used it to help create natural-sounding speech.

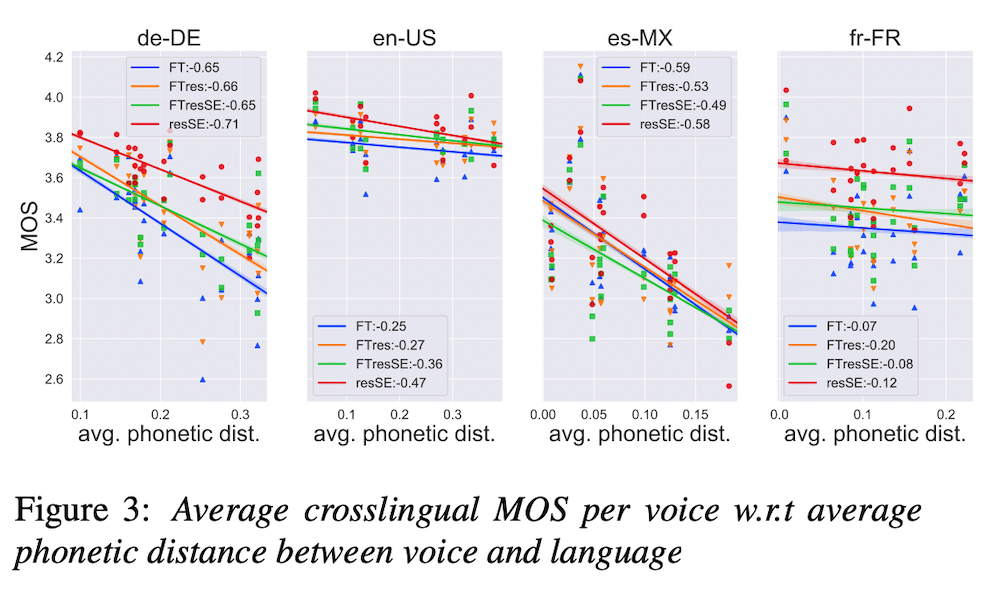

You can tell this is a chart from all the lines

Will this accelerate development?

What they found sounds significant.

“Compared to a single-speaker model, when the suggested system is fine tuned to a speaker, it produces significantly better quality in most of the cases while it only uses less than 40% of the speaker’s data used to build the single-speaker model. In cross-lingual synthesis, on average, the generated quality is within 80% of native single-speaker models, in terms of Mean Opinion Score,” they wrote.
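To make the “within 80%” figure concrete, here is a minimal Python sketch of how a Mean Opinion Score (MOS) comparison might be computed. It assumes MOS is the plain average of listener ratings on a 1 to 5 scale and reads “within 80%” as the ratio of the cross-lingual MOS to the native MOS. The function names and all ratings below are hypothetical, made up for illustration; none of it comes from Apple's paper.

```python
from statistics import mean


def mean_opinion_score(ratings):
    """Return the average of listener ratings on a 1-5 scale."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be between 1 and 5")
    return mean(ratings)


def relative_quality(cross_lingual_mos, native_mos):
    """Ratio of cross-lingual MOS to native MOS (1.0 means parity)."""
    return cross_lingual_mos / native_mos


# Hypothetical listener ratings, invented for this example only.
native_ratings = [5, 4, 4, 5, 4, 4, 4, 5, 4, 4]
cross_lingual_ratings = [4, 3, 4, 3, 4, 3, 4, 3, 4, 3]

native_mos = mean_opinion_score(native_ratings)
cross_mos = mean_opinion_score(cross_lingual_ratings)
ratio = relative_quality(cross_mos, native_mos)

print(f"native MOS: {native_mos:.2f}")
print(f"cross-lingual MOS: {cross_mos:.2f}")
print(f"relative quality: {ratio:.0%}")
```

With these made-up numbers the cross-lingual voice lands a little above 80% of the native score, which is the kind of relationship the researchers describe.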


At the risk of stating the obvious, this may help accelerate synthetic voice development across the industry. For Apple, it may enable the company to quickly build increasingly genuine-sounding Siri voices, and may also make it easier to add more languages and more personality to the service.

The publication of the research follows an earlier paper from Apple that explored how to create a system that allows users to correct speech recognition errors in a virtual assistant by repeating misunderstood words. We’ve all stumbled over a Siri command and then had it say something amusingly irrelevant in response. That earlier work may help prevent this.
