Apple to speak at key AI & ML accessibility technology event

It interests me that Apple’s Senior Director of Global Accessibility Policy & Initiatives, Sarah Herrlinger, is to speak at the Sight Tech Global conference. I think it reflects that Apple is working toward a far more accessible vision for computing.

Designing for Everyone

Herrlinger spoke at the event in 2020 when she appeared with accessibility engineer Chris Fleizach. This year she’s accompanied by Apple Research Lead, AI/ML Accessibility, Jeffrey Bigham. They will appear in a session called ‘Designing for Everyone: Accessibility and Machine Learning at Apple’.

They speak as the UN prepares to celebrate the International Day of Persons with Disabilities on December 3. Apple marked this with significant accessibility website designs last year.

Herrlinger and Bigham will discuss: “Apple’s approach to accessible design, advances of the past year, inclusivity in machine learning research and latest approaches and future features.” You can read what was shared last year here.

Sight Tech Global describes itself as the: “First global, virtual conference dedicated to fostering discussion among technology pioneers on how rapid advances in AI and related technologies will fundamentally alter the landscape of assistive technology and accessibility.”

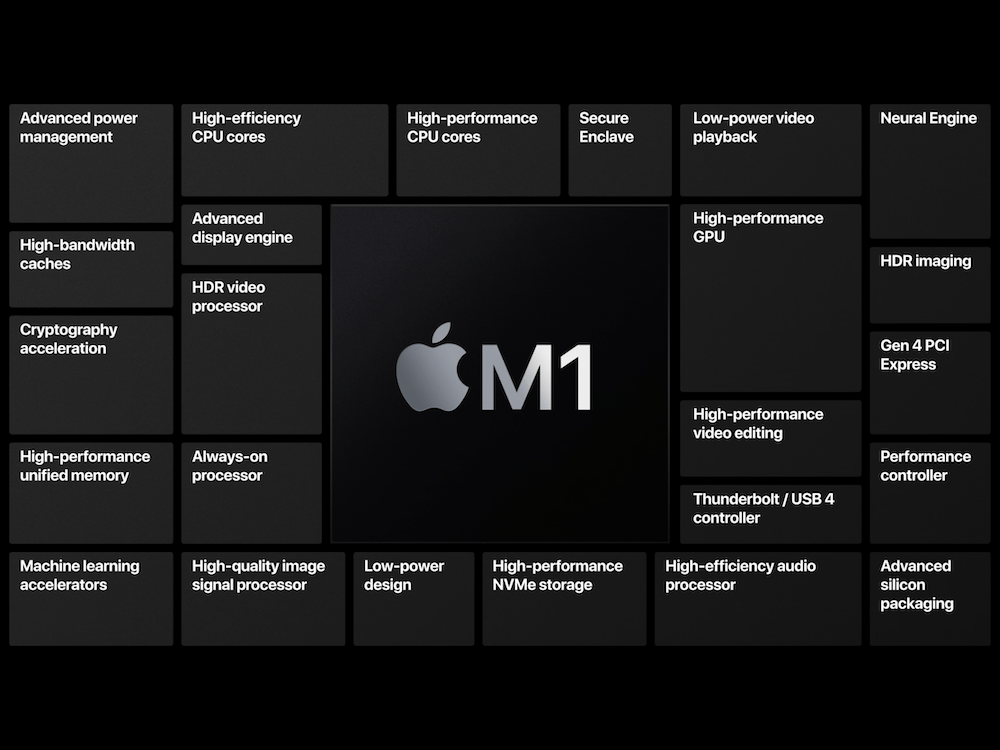

Every human is an augmented human

Under Herrlinger’s watch, Apple continues to weave accessibility solutions deeply inside its products. In doing so, the way those improvements are introduced seems to be dissolving barriers around their use. This contributes to the realization that, with help from technology, there’s little difference in what different people can achieve.

Bigham, meanwhile, is an associate professor at the School of Computer Science at Carnegie Mellon University. He’s also Apple’s research lead focused on advancing accessibility with AI and ML.

That principle – the augmentation of the human – is an essential one. It is certainly conceptually deeply wrapped up in what some of the best innovators are attempting to achieve in AR and AI. Among other questions, it asks, “What if machines could do the boring tasks so we didn’t have to, or if they could enhance our intelligence or capabilities to help us achieve more?”

Designing for everyone

That’s why the name of this keynote sounds so interesting. I think it may provide an interesting glimpse into the (Apple watcher’s) holy grail of “future features”. And I suspect it will also deliver hints at Apple’s work in how machine vision intelligence can augment daily life.

That’s a notion that makes a great deal of sense, given the conference is all about how tech can enhance sight. But as Apple continues to enhance its user interfaces with gesture-based and machine vision intelligence, we’re getting better insight into its efforts in future UI design. These are designs which may be deployed in new product families, particularly the never ever confirmed Apple Glass thing that’s got everybody’s least favorite surveillance capitalists so upset.

Image c/o: Jacinta Iluch Valero/Flickr

What should we expect?

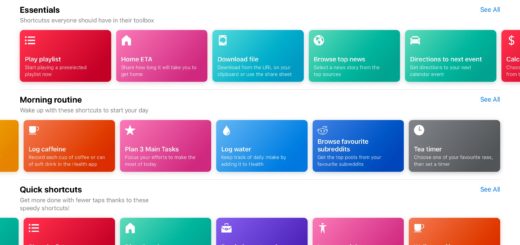

I think we’ll see improvements applied to Apple’s existing tech that lets your iPhone and camera guide you on a walk down the street. I anticipate we’ll see more in terms of object and pose detection and identification. I imagine we’ll see something connecting to Live Text and the capacity to point your camera at a sign to understand it. We may see some work to make Shortcuts more accessible to blind and partially sighted people. But, given every suggestion I just made was an excited child of my own imagination, we should perhaps wait to find out. I think it will be interesting.

Please follow me on Twitter, or join me in the AppleHolic’s bar & grill and Apple Discussions groups on MeWe.