How to use Apple’s clever Visual Look Up system in iOS 15

Visual Look Up is really rather smart

Visual Look Up is a potentially powerful addition to iOS 15. It uses Apple’s machine learning systems, running on the Neural Engine in your device, to analyze images in your Photos library and find you more information about what they show.

What Apple says

Apple says you can use this feature to learn more about popular art, books, landmarks, flowers and pets. The system analyzes your images and highlights objects it can recognize. It will then seek out information and useful services.

What it does

It works like this:

- A picture of a piece of art may get contextual information from Wikipedia.

- That photo you took of your pet may surface information about its breed and links to useful websites.

- A snap of a tourist attraction may be augmented with information about that place, a Maps location and links to more images and information.

- The flower you photographed on Tuesday will get the Wikipedia page describing it.

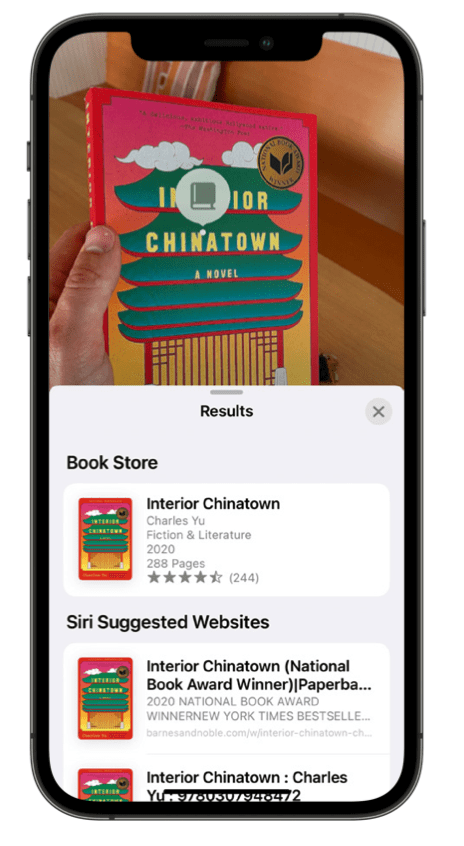

- Take a picture of a book and you may be presented with a link to purchase that title at Apple Books.

It is important to note that Visual Look Up is only available if your region is set to the U.S., for some reason.

I have not tested whether it takes you to Apple Music when you photograph a CD cover.

How to use Visual Look Up

The feature is simple to use but isn’t always available. At times the machine learning system won’t recognize the image. It works best when images are sharp and the main item in the image is clearly defined.

- Open Photos on your iPhone and select the image.

- Look at the small info (i) button at the bottom of the screen.

- If that button has a little star superimposed on it, Visual Look Up has recognized something in the image.

- You should now also see a small icon in the middle of the photo. Tap the icon.

- The Visual Look Up search results will appear.

Search results presently consist of Siri Knowledge (often, but not always, sourced from Wikipedia), similar images found online, Maps, suggested websites, links or books.

What is happening here?

Apple has been putting billions into machine learning and artificial intelligence research, with a particular focus on computer vision. This technology has many applications, but Visual Look Up is likely using at least the following machine learning APIs that Apple makes available to developers:

- Image classification, to identify content

- Image saliency, to identify the main subject of the image: a dog may be on a chair, but this figures out that the image is about the dog, not the chair.

- Text detection, for example to recognize the title of a book or place name.

- Face detection: if this is someone you know, Visual Look Up may know them too.

- Animal recognition: at present, Apple’s API can identify dogs and cats. I expect other animals will follow one day.
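For developers, here is a rough sketch of what those building blocks look like in Apple’s Vision framework. Treat it as an illustration, not as how Visual Look Up is actually built: Apple has not documented which requests the feature uses. The Vision request classes shown are real (available from iOS 13 and macOS 10.15), but the helper names `ImageLabel`, `confidentLabels` and `analyzeImage`, and the 0.5 confidence threshold, are hypothetical choices made for this example. Note that `VNDetectFaceRectanglesRequest` only finds faces; it does not say who they belong to.

```swift
import Foundation
#if canImport(Vision)
import Vision
#endif

/// Hypothetical helper type: a label paired with the model's confidence (0.0 to 1.0).
struct ImageLabel: Equatable {
    let identifier: String
    let confidence: Float
}

/// Hypothetical helper: keep labels at or above `threshold`, highest confidence first.
/// The 0.5 default is an arbitrary illustrative value, not something Apple uses.
func confidentLabels(_ labels: [ImageLabel], threshold: Float = 0.5) -> [ImageLabel] {
    labels
        .filter { $0.confidence >= threshold }
        .sorted { $0.confidence > $1.confidence }
}

#if canImport(Vision)
/// Hypothetical helper: run several of Vision's built-in requests over one image file.
@available(macOS 10.15, iOS 13.0, *)
func analyzeImage(at url: URL) throws {
    let classify = VNClassifyImageRequest()                        // image classification
    let saliency = VNGenerateAttentionBasedSaliencyImageRequest()  // what the picture is "about"
    let text = VNRecognizeTextRequest()                            // e.g. a book title or place name
    let faces = VNDetectFaceRectanglesRequest()                    // finds faces, does not identify them
    let animals = VNRecognizeAnimalsRequest()                      // currently cats and dogs

    // One handler runs all requests against the same image.
    let handler = VNImageRequestHandler(url: url, options: [:])
    try handler.perform([classify, saliency, text, faces, animals])

    // Classification: convert observations to ImageLabel and keep the confident ones.
    let classes = (classify.results as? [VNClassificationObservation] ?? [])
        .map { ImageLabel(identifier: $0.identifier, confidence: $0.confidence) }
    for label in confidentLabels(classes).prefix(5) {
        print("class:", label.identifier, label.confidence)
    }

    // Saliency: bounding boxes (normalized coordinates) of the main subject(s).
    if let regions = (saliency.results as? [VNSaliencyImageObservation])?.first?.salientObjects {
        for region in regions { print("salient region:", region.boundingBox) }
    }

    // Text: the best candidate string for each detected line of text.
    for observation in text.results as? [VNRecognizedTextObservation] ?? [] {
        if let best = observation.topCandidates(1).first { print("text:", best.string) }
    }

    // Faces: just a count of detected face rectangles.
    print("faces found:", (faces.results as? [VNFaceObservation])?.count ?? 0)

    // Animals: the top label for each recognized animal.
    for animal in animals.results as? [VNRecognizedObjectObservation] ?? [] {
        if let top = animal.labels.first { print("animal:", top.identifier, top.confidence) }
    }
}
#endif
```

On a Mac you might call `try analyzeImage(at: URL(fileURLWithPath: "photo.jpg"))`, where the file name is a placeholder. The label-filtering step is kept as plain Swift, separate from the Vision calls, so that part can be reasoned about and tested on its own.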

These are built around Core ML, which suggests it is becoming possible for developers to build solutions around this kind of recognition. Think about a famous landmark: a tourist authority may choose to build an app containing guidebooks, historical material and other resources for that landmark, including an App Clip. In theory at least, if Apple were to let developers attach an App Clip to the information it holds about that location (possibly in Maps), an iPhone user could photograph the place and be taken straight to an app experience for that destination, all from within Photos. That sort of thing isn’t available yet, but we do have Maps Guides, which suggests a similar direction of travel.

Even more tips about new Apple features

Want more tips on new tools and settings in iOS 15, iPadOS 15 and Monterey? Here are some we prepared earlier:

- How to use iPadOS 15’s brilliant new multitasking features

- How to translate text everywhere with iPhones, iPads, Macs

- How to change Safari address bar position on iPhone

- How to make Safari tabs great again on iPadOS 15

- How to use Full Keyboard Access in macOS Monterey

- What’s new in Reminders on iPhone, iPad and Mac?

- How to use iCloud Data Recovery on iOS and Mac

- iOS 15: How Apple makes it easier to find stolen iPhones

- What is Apple’s Digital Legacy and how do you use it?

- What to do if Universal Clipboard stops working

- How to change the size of text for specific apps in iOS 15

- 12 Siri commands that work offline in iOS 15 and later

- How to use Quick Note on iPad and Mac

- How to use Low Power Mode on iPad and Mac

- What’s coming with tvOS 15 on September 20?

Please follow me on Twitter, or join me in the AppleHolic’s bar & grill and Apple Discussions groups on MeWe.