How to use eyes and gestures to control Vision Pro

We continue to collate emerging information on how to use a Vision Pro system. This is our most complete account so far, based on extensive reading and research.

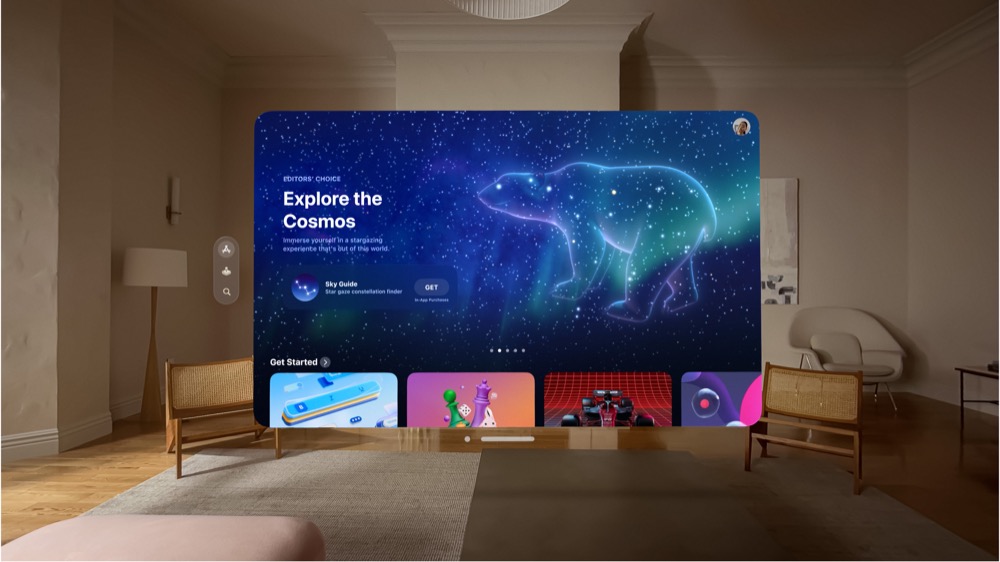

So fluid it’s almost mind controlled

The first thing to think about is the nature of these interactions.

One way to understand it: just as the mouse and keyboard enabled the Mac, and touch became the primary interface for iPhone, visionOS makes eyes and hands the main controllers for your experiences.

To be fair, mice, keyboards and virtual touch continue to be supported. But the primary interface is eye focus with gestures as an important control for selected items.

When building apps, developers can choose how an app icon or control shows itself to a user when selected. Apple says these can either glow slightly or be highlighted in the space. Porting existing apps to support these systems is as simple as ticking a checkbox in Xcode.
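For developers, here is a minimal sketch of what opting a custom view into that highlight might look like in SwiftUI. It uses the standard `hoverEffect` and `contentShape` modifiers; the `PhotoTile` view, its size, and its color are hypothetical and exist only for illustration. Standard controls such as `Button` typically get a system hover effect without any extra work.

```swift
import SwiftUI

// Hypothetical custom tile, used only to illustrate the hover effect.
struct PhotoTile: View {
    var body: some View {
        RoundedRectangle(cornerRadius: 20)
            .fill(.blue)
            .frame(width: 200, height: 120)
            // Describe the shape of the area that lights up when looked at.
            .contentShape(.hoverEffect, RoundedRectangle(cornerRadius: 20))
            // Ask the system to highlight the tile while it has focus.
            .hoverEffect(.highlight)
            .onTapGesture {
                // Placeholder: respond to a tap on the focused tile here.
            }
    }
}
```

The system draws the effect itself, so the app only declares that the view should respond to focus and what shape the response should take.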

Let’s talk about eyes

Eye direction is seen as a “signal of intent”. That means that when you stare at an item, it is highlighted using the hover effect.

The cameras inside Vision Pro look at what your eyes are focusing on to determine this. To help navigate, Apple says developers should use shapes such as circles, pills, and rounded rectangles in the visual canvas for user interfaces.

Once you select an item you can perform gestures with it. If you hold your gaze you can access available contextual menus or additional tooltips about that item.

To search, you look at the microphone glyph in a search field to trigger what Apple calls “Speak to Search”. Then you speak.

The Dwell Control feature lets you select and use content with your eyes. With this, when you stare at an item you’ll be given a Dwell Control menu to perform actions on it.

There is also Quick Look. The idea here is that you can drag and drop Quick Look compatible content from an app or website into a separate window for other use. That seems to build on the Quick Look feature Apple introduced at WWDC 2020, which was presented as a way to let people try items from online retail stores in augmented reality.

Quick Look suggests you may be able to place virtual items in your actual space, and then interact with them spatially. I imagine it will eventually become possible to try clothes on your own avatar, or a developer will make it possible.

Let’s talk about hands

Hands become the gesture controllers of visionOS.

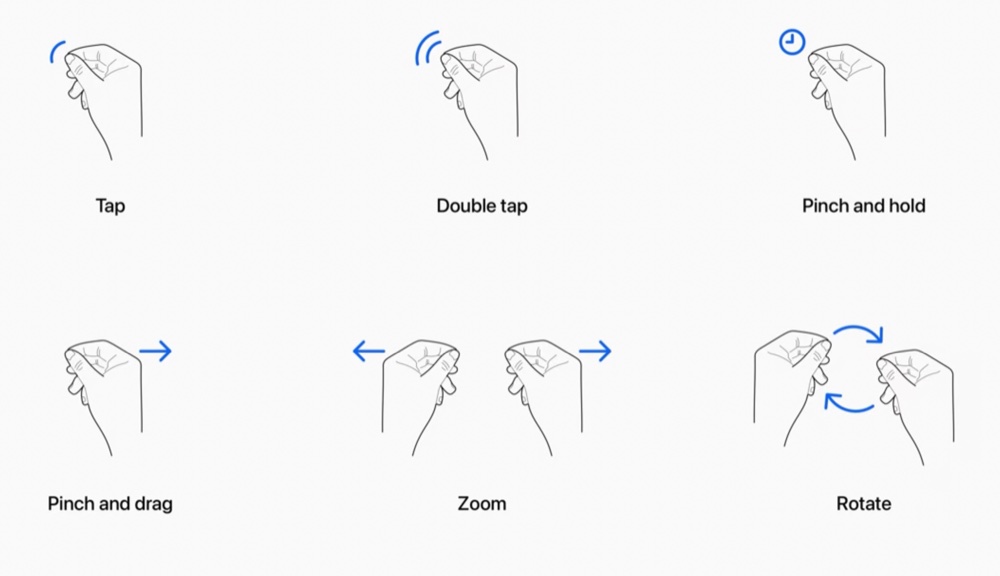

The primary supported gestures in visionOS include:

- Tap: this includes the ability to tap an application in Vision Pro and scroll or tap items there. Pinching your fingers together is the equivalent of pressing on the screen of your phone.

- Double tap.

- Pinch and hold.

- Pinch and drag to scroll.

- Two-handed gestures for zoom and rotate.
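For developers, the single-handed gestures listed above correspond to ordinary SwiftUI gesture handlers. The following is a rough sketch under that assumption; the `GestureDemo` view, its status strings, and the half-second hold duration are illustrative choices, not values taken from Apple.

```swift
import SwiftUI

// Hypothetical demo view: reports which gesture was last recognized.
struct GestureDemo: View {
    @State private var status = "Look at the card, then use a gesture"
    @State private var offset = CGSize.zero

    var body: some View {
        Text(status)
            .padding(40)
            .background(.regularMaterial, in: RoundedRectangle(cornerRadius: 24))
            .offset(offset)
            .hoverEffect()
            // Declare the double tap first so it is considered before the single tap.
            .onTapGesture(count: 2) { status = "Double tap" }
            .onTapGesture { status = "Tap" }
            // Pinch and hold arrives as a long press.
            .onLongPressGesture(minimumDuration: 0.5, perform: {
                status = "Pinch and hold"
            })
            // Pinch and drag arrives as a drag; the card follows the hand
            // and returns to its starting position when released.
            .gesture(
                DragGesture()
                    .onChanged { value in offset = value.translation }
                    .onEnded { _ in offset = .zero }
            )
    }
}
```

Attaching several gestures to one view is convenient for a demo, but a real app would usually give each control a single, obvious gesture.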

Developers can also create custom gestures specific to their apps. Apple recommends these be simple to explain, easy to remember, and free of conflicts with other gestures.

How eyes and hands work together

This simple story explains how eyes and gestures work together.

Imagine you are staring at a large image. You want to zoom into it to get a closer look at a particular spot, so you quite naturally stare at that spot. That centers the UI on where you are looking. You then raise your two hands in a pinch gesture and pull them apart to zoom in, or twist them like a steering wheel to rotate.
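In code, the zoom and rotate part of that story could be sketched with SwiftUI's `MagnifyGesture` and `RotateGesture` recognized at the same time. This is only an illustration: the `ZoomableImage` view, the `clampedScale` helper, and the 0.5x to 4x limits are assumptions, and the sketch does not anchor the zoom to the point being looked at or carry the zoom level over from one gesture to the next.

```swift
import SwiftUI

// Hypothetical helper: keeps the zoom factor within assumed limits.
func clampedScale(_ proposed: CGFloat) -> CGFloat {
    min(max(proposed, 0.5), 4.0)
}

// Hypothetical view: an image that can be zoomed and rotated with two hands.
struct ZoomableImage: View {
    @State private var scale: CGFloat = 1.0
    @State private var angle: Angle = .zero

    var body: some View {
        Image(systemName: "photo")
            .resizable()
            .scaledToFit()
            .frame(width: 400, height: 300)
            .scaleEffect(scale)
            .rotationEffect(angle)
            .gesture(
                // Recognize zoom and rotate together.
                MagnifyGesture()
                    .simultaneously(with: RotateGesture())
                    .onChanged { value in
                        // Either half may be nil if only one gesture is active.
                        if let magnification = value.first?.magnification {
                            scale = clampedScale(magnification)
                        }
                        if let rotation = value.second?.rotation {
                            angle = rotation
                        }
                    }
            )
    }
}
```

Clamping the scale is a design choice made here to stop the image shrinking to nothing or growing without bound; the limits would be tuned per app.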

You can also use a keyboard and mouse

Vision Pro has a virtual keyboard, though Apple warns using this for long periods may cause strain. For longer sessions you’ll want to use a Magic Keyboard and/or Magic Mouse. You can also connect a game controller.

When you use the virtual keyboard, you must keep your hands raised.

- When you first look at the keyboard, you’ll see it is designed to appear as if the keys are slightly raised.

- When you type, you’ll see that as you hover your finger above a button it will glow slightly.

- As you move your finger down toward a button you will see it glow brighter.

- Once you press the button it will appear to go down and you will hear a click as it is triggered.

Accessibility tools such as Dwell Control, Voice Control and Pointer Control are also supported. The headset also works with the Magic Trackpad and Magic Keyboard.
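The key feedback described above (raised, glowing, glowing brighter, pressed) can be thought of as a small state model driven by how close your finger is. The sketch below is purely illustrative and is not how visionOS is implemented; the `KeyFeedback` type, the `feedback(forFingerDistance:)` function, and the distance thresholds are all invented for the example.

```swift
// Hypothetical model of the visual states a virtual key passes through.
enum KeyFeedback {
    case raised      // finger far away: the key simply looks slightly raised
    case glow        // finger hovering above the key
    case brightGlow  // finger moving down toward the key
    case pressed     // key appears to go down and a click plays
}

// Maps finger distance (in centimetres, an assumed unit) to a feedback state.
// The thresholds are made up for illustration.
func feedback(forFingerDistance cm: Double) -> KeyFeedback {
    if cm <= 0 { return .pressed }
    if cm < 2 { return .brightGlow }
    if cm < 6 { return .glow }
    return .raised
}

// Usage: walk a finger down toward a key and print each state.
for distance in [10.0, 4.0, 1.0, 0.0] {
    print(distance, feedback(forFingerDistance: distance))
}
```

Graduated feedback like this matters because there is no physical surface to feel, so the glow stands in for the sense of approaching a key.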
