How your HomePod can even hear ‘Hey Siri’ in a noisy room

The journey of sound

Apple’s HomePod isn’t just good at hearing what you tell it because it has excellent microphones inside, but also because it has excellent machine intelligence in there to help it make sense of what you say.

Speech detection and machine learning

The latest post on Apple’s Machine Learning Journal goes into great detail to explain how the company’s AI systems work with the HomePod’s A8 chip to make speech recognition more accurate.

The combination of AI and hardware runs deep.

One of the big problems Apple has tried to solve in the product is how to make the HomePod good at hearing what people are saying from across the room, or even while music is playing.

The smart speaker system also has to be good at distinguishing the sound of someone speaking to it from other background noise, such as televisions or noisy appliances.

According to Apple, the company has developed a bunch of different multichannel filters that work with the AI to recognise the speech that matters and then focus its attention on the person talking to it.

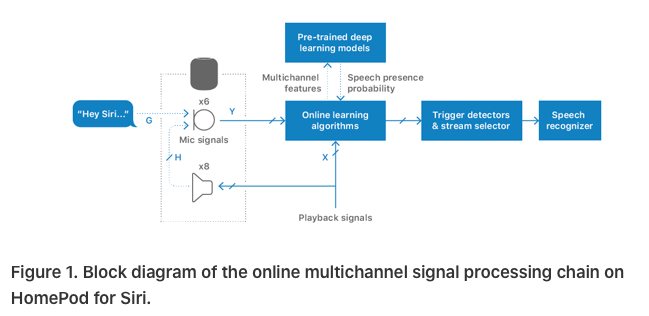

“The Audio Software Engineering and Siri Speech teams built a system that integrates both supervised deep learning models and unsupervised online learning algorithms and that leverages multiple microphone signals,” the company writes.

Machine awareness

What’s interesting about this is that this part of the HomePod’s process uses less than 15 percent of a single core of the A8 processor.

That matters because it leaves the rest of the chip free to do other smart things, such as determining the optimal sound for the music you are playing.

It is also interesting to note that the HomePod system uses multichannel filtering which “constantly” adapts to changing noise conditions and moving talkers. In other words, the system is always ready in case someone chooses to speak to it.

In simple terms, HomePod’s AI automatically ignores any sounds it knows the HomePod is making, along with any external sounds it has recognised as non-essential, and then enhances what is left in order to make any commands audible.
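
To make the first of those steps a little more concrete, here is a minimal Python sketch of how a device might subtract sound it knows it is playing from what its microphone picks up. It uses a textbook single-channel NLMS adaptive filter, which is an assumption chosen for illustration and not Apple’s implementation. The function names, the made-up `echo_path` room response and the synthetic “music” and “voice” signals are all hypothetical.

```python
import math
import random


def nlms_echo_cancel(mic, playback, taps=8, step=0.2, eps=1e-8):
    """Subtract an adaptively estimated copy of `playback` from `mic`.

    Returns the residual signal: what is left once the speaker's own
    output has been approximately removed.
    """
    weights = [0.0] * taps   # running estimate of the echo path
    history = [0.0] * taps   # most recent playback samples, newest first
    residual = []
    for mic_sample, play_sample in zip(mic, playback):
        history = [play_sample] + history[:-1]
        echo_estimate = sum(w * x for w, x in zip(weights, history))
        error = mic_sample - echo_estimate
        # Normalise the update by the recent playback power (the "N" in NLMS).
        power = sum(x * x for x in history) + eps
        weights = [w + step * error * x / power
                   for w, x in zip(weights, history)]
        residual.append(error)
    return residual


def mean_squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def demo(n=4000, seed=0):
    """Return (error before, error after) relative to the clean voice."""
    rng = random.Random(seed)
    # Stand-in for music: random noise. Stand-in for a talker: a quiet tone.
    playback = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    voice = [0.1 * math.sin(2 * math.pi * 0.01 * i) for i in range(n)]
    echo_path = [0.6, 0.3, -0.2, 0.1]  # hypothetical room response
    mic = []
    for i in range(n):
        echo = sum(h * playback[i - k]
                   for k, h in enumerate(echo_path) if i - k >= 0)
        mic.append(echo + voice[i])
    cleaned = nlms_echo_cancel(mic, playback)
    # Compare only the second half, after the filter has had time to adapt.
    half = n // 2
    before = mean_squared_error(mic[half:], voice[half:])
    after = mean_squared_error(cleaned[half:], voice[half:])
    return before, after


if __name__ == "__main__":
    before, after = demo()
    print(f"error vs. clean voice before: {before:.4f}, after: {after:.4f}")
```

Running the demo should show the cleaned signal sitting much closer to the quiet “voice” than the raw microphone signal does. A real device would face several microphones, reverberation and other noise sources, which this toy example does not attempt to model.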

This all happens in real-time and sounds like it could also be a useful technology for noise cancelling if used in a slightly different way in headphones.

It’s also quite an interesting thinking point for anyone who wants to figure out how much actual sense can be extracted by AI from a conversation in a loud and crowded room.

Listen to Figure 7

The post also includes some excellent examples of how the sound the device’s intelligence extracts can differ from the raw sound it hears.

Figure 7 shows how little the system actually hears when music is playing, and how much more audible the sound becomes once it is processed by the AI. While the post doesn’t go into too much detail concerning how Apple’s machine intelligence subsequently processes that sound, the extent to which it identifies a command I couldn’t hear myself is fascinating.