Will the iPhone soon capture 3D AR?

A new Apple patent is generating lots of attention today. It’s interesting in itself and promises some intriguing implementations, but it may also have an impact beyond the obvious: it looks like a solution that could deliver better depth data, which may have implications for AR.

What is the patent?

You can look at the patent here. In brief, it describes a technology which lets you use dual-lens cameras in a brand-new way: It shows the images taken by both cameras simultaneously, allowing the user to choose the image they want to keep. That’s an interesting next step for dual-lens tech, given that the existing dual-camera iPhones (the 7 Plus, 8 Plus and X) simply merge both images to create what the device thinks is the best shot.

What’s the abstract of the patent?

The patent states:

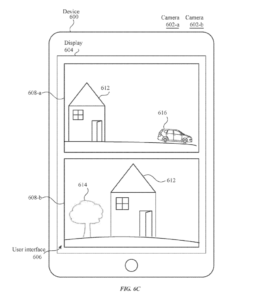

“An electronic device has multiple cameras and displays a digital viewfinder user interface for previewing visual information provided by the cameras. The multiple cameras may have different properties such as focal lengths. When a single digital viewfinder is provided, the user interface allows zooming over a zoom range that includes the respective zoom ranges of both cameras. The zoom setting to determine which camera provides visual information to the viewfinder and which camera is used to capture visual information. The user interface also allows the simultaneous display of content provided by different cameras at the same time. When two digital viewfinders are provided, the user interface allows zooming, freezing, and panning of one digital viewfinder independently of the other. The device allows storing of a composite images and/or videos using both digital viewfinders and corresponding cameras.”
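To make the single-viewfinder idea in the abstract a little more concrete, here is a minimal sketch of how a zoom setting might decide which camera feeds the viewfinder. This is only my illustration, not anything taken from the patent: the `Camera` class, the `select_camera` function and the zoom figures (a wide lens starting at 1x, a telephoto starting at 2x, a 10x ceiling) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    name: str
    native_zoom: float  # zoom factor at which this lens needs no digital crop


# Hypothetical two-camera setup; the numbers are illustrative assumptions.
CAMERAS = [Camera("wide", 1.0), Camera("tele", 2.0)]
MAX_ZOOM = 10.0  # assumed upper limit of the combined zoom range


def select_camera(zoom: float, cameras=CAMERAS, max_zoom: float = MAX_ZOOM) -> Camera:
    """Pick the camera whose native zoom is the highest one not above the request."""
    lowest = min(c.native_zoom for c in cameras)
    if not lowest <= zoom <= max_zoom:
        raise ValueError(f"zoom must be between {lowest} and {max_zoom}")
    usable = [c for c in cameras if c.native_zoom <= zoom]
    # The lens closest to the requested zoom needs the least digital cropping.
    return max(usable, key=lambda c: c.native_zoom)


def digital_crop(zoom: float, camera: Camera) -> float:
    """Remaining digital zoom applied on top of the chosen lens."""
    return zoom / camera.native_zoom
```

Under these assumptions a request for 1.5x would stay on the wide lens with some digital crop, while anything from 2x upward would switch to the telephoto.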

What does it mean when you take a photo?

The way it works seems to indicate that you can zoom and pan each camera independently. This means you could use the device to grab two slightly different images, with different zooms and so on. I can imagine it being used to take a close-up of a person while also capturing their position in a wider context at the same time. This also extends to video.

I guess this suggests what I mean:

What about 3D?

There’s nothing in the patent that talks about 3D, but it’s hard to ignore – after all, if it is indeed possible to capture a subject and the context within which that subject sits, then it must surely be possible for software to deduce things like distance, space, and all the other elements that make for effective depth mapping within a captured image.

You already see things like this when you open Photoshop to mess around with your snaps or use the heal tool to take things out of an image.

The difference here, it seems to me, is that you now have a tech that lets you grab two images of the same subject at the same time, potentially unlocking depth information that I imagine could be used in AR, particularly if shooting video.
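For anyone wondering how two simultaneous images could yield depth at all, the textbook stereo relation is a reasonable starting point: the further a point shifts between the two views (its disparity), the closer it is. The sketch below is my own illustration and is not described in the patent. It assumes both images have already been rectified to the same scale, which takes extra work when the two lenses have different focal lengths, and the function name and example numbers are hypothetical.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Estimate distance to a point using the standard stereo relation Z = f * B / d.

    focal_length_px: focal length expressed in pixels (after rectification)
    baseline_m:      distance between the two camera centres, in metres
    disparity_px:    horizontal shift of the point between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    return focal_length_px * baseline_m / disparity_px


# Illustrative numbers only: a 1000-pixel focal length, lenses 1 cm apart,
# and a feature that shifts 5 pixels between the two views.
distance_m = depth_from_disparity(1000.0, 0.01, 5.0)
```

Because phone lenses sit so close together, the disparity shrinks quickly with distance, which is one reason depth estimates from a dual camera are most useful for nearby subjects.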

This could go one stage further when you think about the recent speculation over a triple-lens camera in a future iPhone and Apple’s 2017 patent on light field technology. It is also notable that the patent describes how it relates to device sensors, accelerometers and the like. Think how the tech could also open up gesture-based virtual interfaces.

Which rather reminds me of…

https://youtu.be/PJqbivkm0Ms

Will this happen?

Only Apple knows if the patent relates to anything we might see.

The company files hundreds of patents every year and only a small number of these make it into shipping products.

All the same, Apple’s general pattern shows it is committed to creating not just AR consumption technologies, but also AR creation tools – just look at the magic you can already create in 3D space when working inside Final Cut, for example.

I guess I’m calling it inevitable that Apple will want not only to let you access AR experiences when using an iPhone, but also to empower you to create them, particularly as we move to collaborative communications, AR in FaceTime and all the other anticipated ways in which AR will weave itself into our future lives.

Please let me know what you think, as I cannot claim to be an expert on AR/VR image capture.
